From Markdown to Training Data

Empowering Website Conversations: Part 2

Introduction

In the previous section, we went over what a chatbot is and the valuable support it can provide. We also went through the steps required to obtain an OpenAI API key, which we will use in the rest of the series. In this section, we will discuss the importance of converting our text into a usable dataset and take the first step in creating our own custom chatbot with OpenAI’s fine-tuning API: creating a question-and-answer dataset built from our Markdown website files. This dataset will be used to create the prompts and expected completions used to train the fine-tuned model.

Part 1: What are Chatbots, and why would I want one?

The process used to create the question and answer pairs is based on the OpenAI Cookbook example and will require an OpenAI API key.

Why do I need to clean my data?

We are going to focus on cleaning and formatting Markdown files into a dataset that can be used with OpenAI’s completion API. But regardless of the type of text you are planning to use, whether Markdown, HTML, or just plain text, some amount of cleaning and reformatting will be required before you can feed it into a machine learning model.

How this data is formatted and cleaned is highly dependent on the specific task you are working on.

If your goal is to tidy up Markdown for text analysis, several actions could enhance its quality. You might choose to segment each word into a token, exclude digits, symbols, and punctuation, transform the entire text into lowercase, and omit frequently used words, also termed “stopwords.” By doing so, you filter out extraneous content, unify words regardless of their capitalization, and efficiently trim the dataset by eliminating less significant details. In essence, these steps refine the text, enhancing its relevance and streamlining its content for more effective analysis.
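As a rough illustration of the text-analysis style of cleaning described above, here is a minimal sketch. The stopword list and the `preprocess_for_analysis` helper are made up for this example; a real project would more likely rely on a fuller stopword list from a library such as NLTK or spaCy.

```python
import re
from typing import List

# A tiny, illustrative stopword list (an assumption for this sketch only)
STOPWORDS = {"a", "an", "and", "the", "is", "of", "to", "in"}

def preprocess_for_analysis(text: str) -> List[str]:
    """Lowercase the text, keep alphabetic tokens only, and drop stopwords."""
    # Lowercasing unifies words regardless of capitalization; the pattern
    # keeps runs of letters, which discards digits, symbols, and punctuation.
    tokens = re.findall(r"[a-z]+", text.lower())
    return [token for token in tokens if token not in STOPWORDS]

tokens = preprocess_for_analysis("The 3 chatbots answered 42 questions in the demo!")
print(tokens)
```

Note how much of the original sentence is discarded, which is fine for word counts but not for a model that expects natural language.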

For our task, we don’t actually want to take all of those steps. This is because we are planning to use the OpenAI Completions API, whose models are trained to take text in its natural language form. But we still want to do some common operations. Our website is made up of Markdown files that get converted into a static site with Jekyll. Therefore, our task involves extracting content from these Markdown files, removing both Markdown and HTML formatting, and segmenting files into more manageable sections while preserving their appropriate context.

Fine-tuning training data format

The end goal of this article is to have a question and answer dataset, built from the Markdown files of the Embyr website, that will enable us to easily create the fine-tuning training dataset. Fine-tuning requires at least a couple hundred examples of prompt and completion combinations.

When using gpt-3.5-turbo as your base model, the training data must be in the following conversational format, with one JSON object per line:

{"messages": [{"role": "system", "content": "<Role of the model>"}, {"role": "user", "content": "<User question or prompt>"}, {"role": "assistant", "content": "<Desired generated result>"}]}

{"messages": [{"role": "system", "content": "<Role of the model>"}, {"role": "user", "content": "<User question or prompt>"}, {"role": "assistant", "content": "<Desired generated result>"}]}

{"messages": [{"role": "system", "content": "<Role of the model>"}, {"role": "user", "content": "<User question or prompt>"}, {"role": "assistant", "content": "<Desired generated result>"}]}

If you are using babbage-002 or davinci-002, the following completions format is used:

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}

For our use case, we will use gpt-3.5-turbo, so we want the message format to be the following:

{"messages": [
  {"role": "system", "content": "You are a factual chatbot to answer questions about AI and Embyr."},
  {"role": "user", "content": "<QUESTION>"},
  {"role": "assistant", "content": "<ANSWER> **STOP**"}
]}

Important things to note:

- STOP is used as a fixed stop sequence to tell the model when a completion should end.

- The prompt format should be the same one that will be used later when using the models.

For more information review the best practices for preparing your dataset.
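To make the target format concrete, here is a minimal sketch of turning one question/answer pair into a single training line. The `to_training_line` helper and the constant names are assumptions made for this example; only the system prompt and the stop marker are taken from the format shown above.

```python
import json

# System prompt and stop marker copied from the message format above
SYSTEM_PROMPT = "You are a factual chatbot to answer questions about AI and Embyr."
STOP_SEQUENCE = " **STOP**"

def to_training_line(question: str, answer: str) -> str:
    """Serialize one question/answer pair as a single JSON line."""
    record = {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question.strip()},
            # The fixed stop marker is appended to every answer
            {"role": "assistant", "content": answer.strip() + STOP_SEQUENCE},
        ]
    }
    # json.dumps escapes embedded newlines, so each record stays on one line
    return json.dumps(record, ensure_ascii=False)

line = to_training_line("<QUESTION>", "<ANSWER>")
print(line)
```

Writing one such line per pair to a file produces the JSON Lines layout that the conversational format expects.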

Create a Context for Generating Questions

To generate the JSON, we will need a dataset of question and answer pairs. The pairs could be written by hand, but I made use of the OpenAI completion route to generate the initial set of questions.

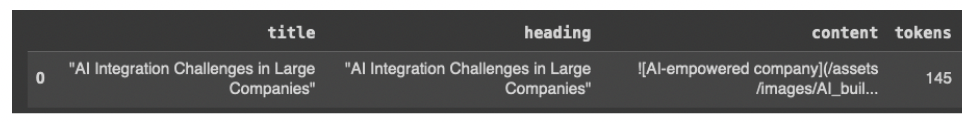

I started by converting the Markdown files from our website into a structured dataset. This dataset is organized into sections based on headers within the content. Each entry encompasses a title, heading, content, and the count of tokens present in the content section.

The above fields will be used to create a “context” that can be passed to the OpenAI completion route to be used to generate questions. The prompt that will be used is:

"Write questions based on the text below\n\nText: {context}\n\nQuestions:\n1.",

The following was done in Google Colab.
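Before getting into the notebook steps, here is a small sketch of how a context and its question prompt fit together. The prompt text is the one quoted above; the helper names and the placeholder values are assumptions for illustration, and the layout of the context (title, heading, blank line, content) mirrors how the context column is built later in this article.

```python
# Prompt template quoted in the text above
PROMPT_TEMPLATE = (
    "Write questions based on the text below\n\nText: {context}\n\nQuestions:\n1."
)

def build_context(title: str, heading: str, content: str) -> str:
    """Combine a section's title, heading, and content into one context string."""
    return f"{title}\n{heading}\n\n{content}"

def build_question_prompt(context: str) -> str:
    """Insert the context into the question-generation prompt."""
    return PROMPT_TEMPLATE.format(context=context)

# Placeholder values; real ones come from the parsed Markdown sections
context = build_context("<TITLE>", "<HEADING>", "<CONTENT>")
prompt = build_question_prompt(context)
print(prompt)
```

Ending the prompt with "1." nudges the model to continue with a numbered list of questions.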

Import python libraries

The following libraries will be used:

- glob: to get the list of Markdown files to extract the columns from. This includes all pages and current blog posts.

- os: read and write the files and database

- re: regular expression operations used to remove HTML comments

- tiktoken and GPT2TokenizerFast: used to count number of tokens and reduce section size

- pandas: Used to build the custom dataset

# set up imports

import glob

import os

import re

import io

import tiktoken

from transformers import GPT2TokenizerFast

import pandas as pd

Get the website files, including all source Markdown files and blog posts

We are building a chatbot that can answer questions about Embyr and the website so we want the training data to include related content from the main pages of our website as well as the blog posts we have written to provide well rounded answers.

# Get a list of the files we care about

files_to_use = glob.glob("website/*/*.md")

files_to_use += glob.glob("website/*.md")

files_to_use.sort()

The above gave us the following list of files:

['website/_posts/2023-03-21-ai-integration-challenges.md',

'website/_posts/2023-03-21-regtech-and-ai.md',

'website/_posts/2023-03-22-overcoming-organizational-resistance.md',

'website/about.md',

'website/blog.md',

'website/careers.md',

'website/contact_us.md',

'website/index.md',

'website/possibilities.md',

'website/services.md']

Extract and clean the data

We need to remove any Markdown or HTML syntax, including the front matter, from the Markdown files before parsing the Markdown files into a dataset. The files will be separated into sections by title and headers.

The following code cleans and parses each Markdown page and then saves the result into a pd.DataFrame:

- Skip the Markdown front matter, extracting the page title

- Ignore HTML lines

- Ignore Markdown CSS class lines

- Extract each heading and the content that follows it

- Clean the content:

  - Remove any HTML comments

  - Remove starting and ending whitespace

  - Replace double spaces with a single space

The above list is not exhaustive of all the steps you may need to take. It is important to review your own text to see if additional steps are required.

## setup function to clean the docs
# Non-greedy match, so two comments on the same line are removed separately
html_comment = re.compile(r"<!--.*?-->")

def clean_section(text):
    # Put text cleaning steps here
    text = re.sub(html_comment, "", text)
    text = text.replace("  ", " ")  # replace double spaces with a single space
    text = text.strip()  # strip last, after the removals above
    return text

def parse_doc(path):
    # Divide doc into sections, using headers to split sections
    # Ignore html and some Markdown
    skip_section = False
    content = []
    section = ""
    title = ""
    heading = ""
    with open(path, "r") as file:
        for line in file:
            line = line.strip()
            # skip front matter sections (delimited by "---" lines)
            if line.startswith("---"):
                skip_section = not skip_section
                continue
            if skip_section:
                # Inside the front matter: only keep the page title
                if line.startswith("title:"):
                    title = line[len("title:"):].strip()
                    heading = title
                continue
            elif line.startswith("<") or line.startswith("{"):
                # Skip html lines and markdown css configuration
                continue
            elif line.startswith("#"):
                if section != "":
                    # Store last section and reset
                    content.append((title, heading, clean_section(section)))
                    section = ""
                    heading = ""
                # Start new section; only strip the leading "#" markers
                heading = line.lstrip("#").strip()
            else:
                # Add line to section
                section = section + " " + line
    # Store last section
    content.append((title, heading, clean_section(section)))
    return content

website_contents = []
for path in files_to_use:
    contents = parse_doc(path)
    website_contents.extend(contents)

website_contents = pd.DataFrame(website_contents, columns=["title", "heading", "content"])

Make sure no section is too long to submit

The next step is to make sure that no section is too long to submit to the completions API. If a section is too long, shorten it to a maximum size.

I used text-davinci-001 in the following completions, which has a max token length of 2,049 tokens. I would suggest moving to text-davinci-003, which has a max of 4,097 tokens, or gpt-3.5-turbo-instruct if it is now available.

# Make sure no section is too long (longer than the token limit)
# sent_tokenize requires NLTK and its Punkt sentence tokenizer data
from nltk.tokenize import sent_tokenize

tokenizer = GPT2TokenizerFast.from_pretrained("gpt2")

def count_tokens(text: str) -> int:
    """count the number of tokens in a string"""
    return len(tokenizer.encode(text))

def reduce_long(
    long_text: str, long_text_tokens: int = 0, max_len: int = 590
) -> str:
    """
    Reduce a long text to a maximum of `max_len` tokens by potentially cutting at
    a sentence end
    """
    if not long_text_tokens:
        long_text_tokens = count_tokens(long_text)
    if long_text_tokens > max_len:
        sentences = sent_tokenize(long_text.replace("\n", " "))
        ntokens = 0
        for i, sentence in enumerate(sentences):
            ntokens += 1 + count_tokens(sentence)
            if ntokens > max_len:
                # Sentences keep their own punctuation, so join with a space
                return " ".join(sentences[:i])
    return long_text

MAX_SECTION_LEN = 1500
SEPARATOR = "\n* "
# GPT-2 BPE encoding (same vocabulary as r50k_base, used by text-davinci-001);
# text-davinci-003 uses "p50k_base" instead
ENCODING = "gpt2"
encoding = tiktoken.get_encoding(ENCODING)
separator_len = len(encoding.encode(SEPARATOR))
print(f"Context separator contains {separator_len} tokens")

# count tokens in website content
website_contents["tokens"] = [len(encoding.encode(x)) for x in website_contents["content"]]

# we don't currently have any sections that are too large so we don't need to
# split any up, but if you do, use reduce_long to shorten the section
website_contents.loc[website_contents.tokens > MAX_SECTION_LEN]
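The section limit matters because, for these completion models, the prompt and the generated completion share the same token window. The arithmetic can be sketched as follows, using the model limits quoted above and the `max_tokens=257` setting used for generation in this article; the helper name is an assumption for this example.

```python
# Context window sizes quoted in this article
TEXT_DAVINCI_001_WINDOW = 2049
TEXT_DAVINCI_003_WINDOW = 4097
# Completion budget used for question and answer generation in this article
MAX_COMPLETION_TOKENS = 257

def max_prompt_tokens(context_window: int, max_completion_tokens: int) -> int:
    """Tokens left for the prompt once the completion budget is reserved."""
    return context_window - max_completion_tokens

print(max_prompt_tokens(TEXT_DAVINCI_001_WINDOW, MAX_COMPLETION_TOKENS))  # 1792
print(max_prompt_tokens(TEXT_DAVINCI_003_WINDOW, MAX_COMPLETION_TOKENS))  # 3840
```

With text-davinci-001, a 1,500-token section leaves 292 tokens of headroom for the instruction text, title, and heading that wrap the section in the prompt.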

Save off the database we just created

We will use this file in the next section.

# Save the database
os.makedirs("data", exist_ok=True)
website_contents.to_csv('data/embyr_website_sections.csv', index=False)

Load the data and form context that combines titles, heading and the content

The context that is created is going to be used to generate the questions.

## Add a context of combined title/heading/content

import pandas as pd

df = pd.read_csv('data/embyr_website_sections.csv')

df['context'] = df.title + "\n" + df.heading + "\n\n" + df.content

df.head()

Generate Questions

Using our new dataset, we will use text-davinci-001 to create an initial set of questions based on the Embyr website.

Replace text-davinci-001 with text-davinci-003 or gpt-3.5-turbo-instruct if it is available. Please take a look at the model availability announcement.

Generate Questions for each section

Note: This is very expensive

Make sure to set the OPENAI_API_ORG and OPENAI_API_KEY environment variables. We are going to use the completions API with the following parameters:

- temperature=0: Reduces the amount of randomness used, which should give us the most deterministic results.

- max_tokens=257: The max number of tokens that can be returned.

- stop=["\n\n"]: The stop token that is built into the prompt.

Complete completion API documentation

# Generate questions for each section
# NOTE THIS IS EXPENSIVE
# openai.Completion is the pre-1.0 interface of the openai Python library
try:
    import openai
except ImportError:
    !pip install openai
    import openai
import pandas

def get_questions(context):
    # Sections with no content are read back from the CSV as NaN
    if pandas.isna(context):
        return ""
    try:
        response = openai.Completion.create(
            engine="text-davinci-001",
            prompt=f"Write questions based on the text below\n\nText: {context}\n\nQuestions:\n1.",
            temperature=0,
            max_tokens=257,
            stop=["\n\n"]
        )
        return response['choices'][0]['text']
    except Exception as e:
        print(e)
        return ""

openai.organization = os.environ.get("OPENAI_API_ORG")
openai.api_key = os.environ.get("OPENAI_API_KEY")

# This is expensive don't rerun
df['questions'] = df.context.apply(get_questions)
# The prompt ends with "1.", so add it back to the start of the completion
df['questions'] = "1." + df.questions
print(df[['questions']].values[0][0])

# remove any NaN questions
df.loc[pandas.isna(df["context"]), 'questions'] = None

Generate Answers

Using our dataset and generated questions, we will use text-davinci-001 to create an initial set of answers for the questions based on the Embyr website.

Replace text-davinci-001 with text-davinci-003 or gpt-3.5-turbo-instruct if it is available. Please take a look at the model availability announcement.

Generate Answers for each section

Now that we have a list of questions generated, we will generate an answer for each one.

Note: This is very expensive.

def get_answers(row):
    # Skip rows whose questions were cleared in the previous step
    if pandas.isna(row.questions):
        return ""
    try:
        response = openai.Completion.create(
            engine="text-davinci-001",
            prompt=f"Write answer based on the text below\n\nText: {row.context}\n\nQuestions:\n{row.questions}\n\nAnswers:\n1.",
            temperature=0,
            max_tokens=257,
        )
        return response['choices'][0]['text']
    except Exception as e:
        print(e)
        return ""

openai.organization = os.environ.get("OPENAI_API_ORG")
openai.api_key = os.environ.get("OPENAI_API_KEY")

# This is expensive DON'T RERUN
df['answers'] = df.apply(get_answers, axis=1)
# The prompt ends with "1.", so add it back to the start of the completion
df['answers'] = "1." + df.answers
# Drop rows with missing values and renumber the index
df = df.dropna().reset_index(drop=True)
df.head()

Save off the QA dataset

# save QA database

df.to_csv('data/embyr_website_qa.csv', index=False)
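At this point each row holds a numbered list of questions and a numbered list of answers as two strings. Here is a minimal sketch of splitting them into individual pairs, assuming the model returned one numbered item per line; the helper names are made up for this example, and rows where the counts do not match are simply skipped so they can be reviewed by hand.

```python
import re
from typing import List, Tuple

# Matches lines such as "1. What is a nebula?" and captures the text after the number
NUMBERED_ITEM = re.compile(r"^[ \t]*\d+\.[ \t]*(.+?)[ \t]*$", re.MULTILINE)

def split_numbered(text: str) -> List[str]:
    """Split a numbered list (one item per line) into its items."""
    return NUMBERED_ITEM.findall(text)

def pair_questions_and_answers(questions: str, answers: str) -> List[Tuple[str, str]]:
    """Pair up questions and answers by position; return [] if the counts differ."""
    q_items = split_numbered(questions)
    a_items = split_numbered(answers)
    if len(q_items) != len(a_items):
        return []
    return list(zip(q_items, a_items))

pairs = pair_questions_and_answers(
    "1. What is a nebula?\n2. What does a nebula look like?",
    "1. <ANSWER 1>\n2. <ANSWER 2>",
)
print(pairs)
```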

Review the Dataset

Now that we’ve got the dataset, it’s time to give it a little makeover. Using it as is might not lead us to the best results.

For example, we forgot to remove images when cleaning our Markdown files! A blog post has an image of a nebula, so the following questions were generated:

| Title | Heading | Content | Tokens | Context | Questions | Answers |
|---|---|---|---|---|---|---|
| The Executive’s Guide to ChatGPT | “The Executive’s Guide to ChatGPT” | !a nebula in space | 23 | “The Executive’s Guide to ChatGPT” | 1. What is a nebula? 2. What does a nebula look like? 3. What is the significance of a nebula? | 1. A nebula is an interstellar cloud of gas and dust. 2. A nebula can look like a variety of different things, including gas clouds, star-forming regions, and stellar nurseries. 3. Nebulae are significant because they are the birthplace of stars and planets. |

Because we generated our training data with OpenAI, it is very important that we review them. Think of it like tidying up a room before guests arrive. We’re decluttering, organizing, and making sure everything is in its right place. By doing this, we’re left with a dataset that’s refined and ready to impress.
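One cleaning step that would have prevented the nebula questions is removing Markdown image tags before the sections are built. Here is a minimal sketch of such a step; the `strip_images` helper and the image path in the example are hypothetical, and the pattern only covers the inline `![alt](url)` form.

```python
import re

# Inline Markdown images: ![alt text](url)
MD_IMAGE = re.compile(r"!\[[^\]]*\]\([^)]*\)")

def strip_images(text: str) -> str:
    """Remove inline Markdown images and tidy the whitespace left behind."""
    without_images = MD_IMAGE.sub("", text)
    return re.sub(r"\s{2,}", " ", without_images).strip()

# Hypothetical example line; the path is made up for illustration
cleaned = strip_images("Intro ![a nebula in space](/assets/nebula.png) text")
print(cleaned)
```

A step like this could be called from the section-cleaning function so that image-only sections end up empty and are easy to spot.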

But here’s where it gets interesting – it’s not just about cleaning. We’re also adding a dash of variety. We should introduce new questions and answers, spicing things up to make our dataset a dynamic conversational powerhouse. Whether it’s geeky tech talk or casual chit-chat, our dataset will cover it all. In general, the more high-quality question/answer pairs you have, the better the resulting model will work. After this review, I had more than 400 questions ready for training.

Conclusion

In this section, we have covered the importance of data cleaning, how to convert Markdown files into an initial dataset, and how to use that data to generate a set of question and answer pairs that we will use to fine-tune the model for our chatbot. We also covered the importance of taking the time to review the generated content manually.

In the next section, we are ready to train our first model! We will use our generated questions and answers to create the JSON file required by the OpenAI command line tool to create the QA model that will be the core of our chatbot. Once we have the model, it is time to play with it and become familiar with how well it works and what improvements we may want to make.

Part 3: Fine-tune a Chatbot QA model

References

Olympics example from OpenAI Cookbook